unhosted web apps

freedom from web 2.0's monopoly platforms

1. Personal servers and unhosted web apps

Hosted software threatens software freedom

The Linux operating system and the Firefox browser have an important thing in common: they are free software products. They are, in a sense, owned by humankind as a whole. Both have played an important role in solving the situation that existed in the nineties, where it had become pretty much impossible to create or use software without paying tribute to the Microsoft monopoly which held everything in its grip.

The possibility to freely create, improve, share, and use software is called Software Freedom. It is very much comparable to, for instance, freedom of the press. And as software becomes ever more important in our society, so does Software Freedom. We have come a long way since the free software movement was started, but in the last five or ten years, a new threat has come up: hosted software.

When you use your computer, some software runs on your computer, but you are also using software that runs on servers. Servers are computers just like the ones we use every day, but usually racked into large air-conditioned rooms, and stripped of their screen and keyboard, so their only interface is a network cable. Whenever you "go online", your computer is communicating over the internet with one or more of these servers, which produce the things you see as websites. Unfortunately, most servers are controlled by powerful companies like Google, Facebook and Apple, and these companies have full control over most things that happen online.

This means that even though you can run Linux and Firefox on your own computer, humankind is still not free to create, improve, share and use just any software. In almost everything we do with our computers, we have to go through the companies that control the hosted software that currently occupies the most central parts of our global infrastructure. The companies themselves are not to blame for this; they are inanimate entities whose aim is to make money by offering attractive products. What is failing are the non-commercial alternatives to their services.

One server per human

The solution to the control of hosted software over our infrastructure is quite simple: we have to decentralize the power. Just like freedom of press can be achieved by giving people the tools to print and distribute underground pamphlets, we can give people their freedom of software back by teaching them to control their own server.

Building an operating system like Linux, or a browser like Firefox is quite hard, and it took some of the world's best software engineers many years to achieve this. But building a web server which each human can choose to use for their online software needs is not hard at all: from an engineering perspective, it is a solved problem. The real challenge is organizational.

The network effect

A lot of people are actually working on software for "personal servers" like this. Many of them already have functional products. You can go to one of these projects right now, follow the install instructions for their personal server software, and you will have your own personal server, independent from any of the hosted software monopolies. But there are several reasons why not many people do this yet. They all have to do with the network effect.

First, there is the spreading of effort, thinned out across many unrelated projects. But this has not stopped most of these projects from already publishing something that works. So let's say you choose the personal server software from project X, you install it, and run it.

Then we get to the second problem: if I happen to run the software from project Y, and I now want to communicate with you, then this will only work if these two projects have taken the effort of making their products compatible with each other.

Luckily, again, in practice most of these personal server projects are aware of this need, and cooperate to develop standard protocols that describe how servers should interact with each other. But these protocols often cover only a portion of the functionality that these personal servers have, and also, they often lead to a lot of discussion and alternative versions. This means that there are now effectively groups of personal server projects. So your server and my server no longer have to run exactly the same software in order to understand each other, but their software now has to be from the same group of projects.

But the fourth problem is probably bigger than all the previous ones put together: since at present only early adopters run their own servers, chances are that you want to communicate with the majority of other people, who are (still) using the hosted software platforms from web 2.0's big monopolies. With the notable exception of Friendica, most personal server projects do not really provide a way to switch to them without your friends also switching to a server from the same group of projects.

Simple servers plus unhosted apps

So how can we solve all those problems? There are too many different applications to choose from, and we have reached a situation where choosing which application you want to use dictates, through all these manifestations of the network effect, which people you will be able to communicate with. The answer, or at least the answer we propose in this blog series, is to move the application out of the server, and into the browser.

Browsers are now very powerful environments, not just for viewing web pages, but actually for running entire software applications. We call these "unhosted web applications", because they are not hosted on a server, yet they are still web applications, and not desktop applications, because they are written in html, css and javascript, and they can only run inside a browser's execution environment.

Since we move all the application features out of the personal server and into the browser, the actual personal server becomes very simple. It is basically reduced to a gateway that can broker connections between unhosted web apps and the outside world. And if an incoming message arrives while the user is not online with any unhosted web app, it can queue it up and keep it safe until the next time the user connects.
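As a rough illustration of the queueing role described above, the following sketch keeps incoming messages in memory while no app is connected, and flushes them on the next connection. The names `createInbox`, `deliver`, `connect`, `disconnect` and `pending` are made up for this example, and a real personal server would presumably persist the queue to disk rather than keep it in memory.

```javascript
// Hypothetical sketch: a gateway-side inbox that queues messages while
// no unhosted web app is connected. All names here are illustrative.
function createInbox() {
  var queue = [];      // messages waiting for the user to come online
  var handler = null;  // callback of the currently connected app, if any

  return {
    // Called by the server when a message arrives from the outside world.
    deliver: function (message) {
      if (handler) {
        handler(message);      // an app is online: pass it on right away
      } else {
        queue.push(message);   // nobody is listening: keep it safe
      }
    },
    // Called when an unhosted web app connects.
    connect: function (onMessage) {
      handler = onMessage;
      while (queue.length > 0) {
        handler(queue.shift()); // flush the backlog in arrival order
      }
    },
    // Called when the app goes away again.
    disconnect: function () {
      handler = null;
    },
    // Number of messages currently waiting.
    pending: function () {
      return queue.length;
    }
  };
}

var inbox = createInbox();
inbox.deliver('hello while offline');
inbox.connect(function (message) {
  console.log('received:', message);
});
```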

Unhosted web apps also lack a good place to store valuable user data, and servers are good for that. So apart from brokering our connections with the outside world, the server can also function as cloud storage for unhosted web apps. This makes sure your data is safe if your device is broken or lost, and also lets you easily synchronize data between multiple devices. We developed the remoteStorage protocol for this, which we recently submitted as an IETF Internet Draft.

The unhosted web apps we use can be independent of our personal server. They can come from any trusted source, and can be running in our browser without the need to choose a specific application at the time of choosing and installing the personal server software. This separation of concerns is what ultimately allows people to freely choose:

- Which personal server you run

- Which application you want to use today

- Who you interact with

No Cookie Crew

This blog is the official handbook of a group of early adopters of unhosted web apps called the No Cookie Crew. We call ourselves that because we have disabled all cookies in our browser, including first-party cookies. We configure our browser to only make an exception for a handful of "second party" cookies. To give an example: if you publish something "on" Twitter, then Twitter acts as a third party, because they, as a company, are not the intended audience. But if you send a user support question to Twitter, then you are communicating "with" them, as a second party. Other examples of second-party interactions are online banking, online check-in at an airline website, or buying an ebook from Amazon. But if you are logging in to eBay to buy an electric guitar from a guitar dealer, then eBay is more a third party in that interaction.

There are situations where going through a third party is unavoidable, for instance if a certain guitar dealer only sells on eBay, or a certain friend of yours only posts on Facebook. For each of these "worlds", we will develop application-agnostic gateway modules that we can add to our personal server. Usually this will require having a "puppet" account in that world, which this gateway module can control. Sometimes, it is necessary to use an API key before your personal server can connect to control your "puppet", but these are usually easy to obtain. To the people in each world, your puppet will look just like any other user account in there. But the gateway module on your personal server allows unhosted web apps to control it, and to "see" what it sees.

In no case will we force other people to change how they communicate with us. Part of the goal of this exercise is to show that it is possible to completely log out of web 2.0 without having to lose contact with anybody who stays behind.

To add to the fun, we also disabled Flash, Quicktime, and other proprietary plugins, and will not use any desktop apps.

Whenever we have to violate these rules, we make a note of it, and try to learn from it. And before you ask, at this moment, the No Cookie Crew still has only one member: me. :) Logging out of web 2.0 is still pretty much a full-time occupation, because most things you do require a script that you have to write, upload and run first. But if that sounds like fun to you, you are very much invited to join! Otherwise, you can also just watch from a distance by following this blog.

This blog

Each blog post in this series will be written like a tutorial, including code snippets, so that you can follow along with the unhosted web apps and personal server tools in your own browser and on your own server. We will mainly be using nodejs on the server-side. We will publish one episode every Tuesday. As we build up material and discuss various topics, we will be building up the official Handbook of the No Cookie Crew. Hopefully, any web enthusiast, even if you haven't taken the leap yet to join the No Cookie Crew, will find many relevant and diverse topics discussed here, and food for thought. We will start off next week by building an unhosted text editor from scratch. It is the editor I am using right now to write this, and it is indeed an unhosted web app: it is not hosted on any server.

Our research on unhosted web apps has been fully funded for two years now by donations from many awesome and generous people, as well as several companies and foundations. This blog series is a write-up of all the tricks and insights we have discovered so far.

2. An unhosted editor

Welcome to the second episode of Unhosted Adventures! If you log out of all websites, and close all desktop applications, putting your browser fullscreen, you will not be able to do many things. In order to get some work done, you will most likely need at least a text editor. So that is the first thing we will build. We will use CodeMirror as the basis. This is a client-side text editor component, developed by Marijn Haverbeke, which can easily be embedded in web apps, whether hosted or unhosted. But since it requires no hosted code, it is especially useful for use in unhosted web apps. The following code allows you to edit javascript, html and markdown right inside your browser:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8">

<title>codemirror</title>

<script

src="http://codemirror.net/lib/codemirror.js">

</script>

<link rel="stylesheet"

href="http://codemirror.net/lib/codemirror.css" />

<script

src="http://codemirror.net/mode/xml/xml.js">

</script>

<script

src="http://codemirror.net/mode/javascript/javascript.js">

</script>

<script

src="http://codemirror.net/mode/css/css.js">

</script>

<script

src="http://codemirror.net/mode/htmlmixed/htmlmixed.js">

</script>

<script

src="http://codemirror.net/mode/markdown/markdown.js">

</script>

</head>

<body>

<div id="editor"></div>

<div>

<input type="submit" value="js"

onclick="myCodeMirror.setOption('mode', 'javascript');">

<input type="submit" value="html"

onclick="myCodeMirror.setOption('mode', 'htmlmixed');">

<input type="submit" value="markdown"

onclick="myCodeMirror.setOption('mode', 'markdown');">

</div>

</body>

<script>

var myCodeMirror = CodeMirror(

document.getElementById('editor'),

{ lineNumbers: true }

);

</script>

</html>

Funny fact is, I wrote this example without having an editor. The way I did it was to visit example.com, and then open the web console and type

document.body.innerHTML = ''

to clear the screen. That way I could use the console to add code to the page, and bootstrap myself into my first editor, which I could then save to a file with "Save Page As" from the "File" menu. Once I had it displaying a CodeMirror component, I could start using that to type in, and from there write more editor versions and then other things. But since I already went through that first bootstrap phase, you don't have to and can simply use this data url (in Firefox you may have to click the small "shield" icon to unblock the content, or copy the link location and paste it into another tab's URL bar). Type some markdown or javascript in there to try out what the different syntax highlightings look like. You can bookmark this data URL, or click 'Save Page As...' from the 'File' menu of your browser. Then you can browse to your device's file system by typing "file:///" into the address bar, and clicking through the directories until you find the file you saved. To view the source code, an easy trick is to replace the first part of the data URL:

data:text/html;charset=utf-8,

with:

data:text/plain;charset=utf-8,

Also fun is opening the web console while viewing the editor (Ctrl-Shift-K, Cmd-Alt-K, Ctrl-Shift-I, or Cmd-Alt-I depending on your OS and browser), and pasting the following line in there:

myCodeMirror.setValue(decodeURIComponent(location.href

.substring('data:text/html;charset=utf-8,'.length)));

This will decode the data URL and load the editor into itself. Now you can edit your editor. :) To update it, use the "opposite" line, which will take you to the new version you just created:

location.href = 'data:text/html;charset=utf-8,'

+ encodeURIComponent(myCodeMirror.getValue());

Try it, by adding some text at the end of the document body and executing that second line. After that you can execute the first line again to load your modified version of the editor back into itself (use the up-arrow to see your command history in the web console).
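If you prefer functions over one-liners, the two console commands above can be wrapped as in the sketch below. The names `PREFIX`, `toDataUrl` and `fromDataUrl` are made up for this example; the prefix is the one used throughout this episode.

```javascript
// Sketch: the two console one-liners above, wrapped as plain functions.
var PREFIX = 'data:text/html;charset=utf-8,';

// Turn an html string into a data URL (what the "update" line does).
function toDataUrl(html) {
  return PREFIX + encodeURIComponent(html);
}

// Recover the html source from such a data URL (what the "load" line does).
function fromDataUrl(url) {
  if (url.indexOf(PREFIX) !== 0) {
    throw new Error('not an html data URL with the expected prefix');
  }
  return decodeURIComponent(url.substring(PREFIX.length));
}

var url = toDataUrl('<p>hello, editor</p>');
console.log(url);
console.log(fromDataUrl(url));
```

In the editor itself you would call `myCodeMirror.setValue(fromDataUrl(location.href))` to load, and `location.href = toDataUrl(myCodeMirror.getValue())` to update.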

The next step is to add some buttons to make loading and saving the files you edit from and to the local filesystem easier. For that, we add the following code into the document body:

<input type="file" onchange="localLoad(this.files);" />

<input type="submit" value="save"

onclick="window.open(localSave());">

And we add the following functions to the script tag at the end:

//LOCAL

function localSave() {

return 'data:text/plain;charset=utf-8,'

+ encodeURIComponent(myCodeMirror.getValue());

}

function localLoad(files) {

if (files.length === 1) {

document.title = escape(files[0].name);

var reader = new FileReader();

reader.onload = function(e) {

myCodeMirror.setValue(e.target.result);

};

reader.readAsText(files[0]);

}

}

The 'localLoad' code is copied from MDN; you should also check out the html5rocks tutorial, which describes how you can add nice drag-and-drop. But since I use no desktop applications, I usually have Firefox fullscreen and have no other place to drag any items from, so that is useless in my case.

The 'save' button is not ideal. It opens the editor contents in a new window so that you can save it with "Save Page As...", but that dialog then is not prefilled with the file path to which you saved the file the last time, and also it asks you to confirm if you really want to replace the file, and you need to close the extra window again, meaning saving the current file takes five clicks instead of one. But it is the best I could get working. The reason the window.open() call is in the button element and not in a function as it would normally be, is that popup blockers may block that if it is too deep in the call stack.

UPDATE: nowadays, you can use the download attribute to do this in a much nicer way. At the time of writing, that still only existed as a Chrome feature; it arrived in Firefox in spring 2013. Thanks to Felix for pointing this out!
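A minimal sketch of what that could look like, assuming a browser that supports the `download` attribute on links. The function names `saveLinkAttributes` and `saveWithDownloadAttribute` are made up for this example.

```javascript
// Sketch, assuming a browser that supports the `download` attribute on
// <a> elements. Both function names are made up for this example.

// Pure part: work out the href and the suggested file name.
function saveLinkAttributes(filename, text) {
  return {
    href: 'data:text/plain;charset=utf-8,' + encodeURIComponent(text),
    download: filename // suggested file name for the save dialog
  };
}

// Browser-only part: create a temporary link and click it.
function saveWithDownloadAttribute(filename, text) {
  var attrs = saveLinkAttributes(filename, text);
  var link = document.createElement('a');
  link.href = attrs.href;
  link.download = attrs.download;
  document.body.appendChild(link); // some browsers need it in the DOM
  link.click();
  document.body.removeChild(link);
}
```

The 'save' button could then call something like `saveWithDownloadAttribute('notes.md', myCodeMirror.getValue())`, where 'notes.md' is just a placeholder file name.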

You may have noticed that these examples include some js and css files from http://codemirror.net/ which don't load if you have no network connection. So here is the full code of this tutorial, with all the CodeMirror files copied into it from version 2.34: unhosted editor. So by bookmarking that, or saving it to your filesystem, you will always be able to edit files in your browser, whether online or offline. I am using this editor right now to write this blogpost, and I will use it to write all other apps, scripts and texts that come up in the coming weeks. It is a good idea to save one working version which you don't touch, so that if you break your editor while editing it, you have a way to bootstrap back into your editor-editing world. :)

That's it for this week. We have created the first unhosted web app of this blog series, and we will be using it as the development environment to create all other apps, as well as all server-side scripts in the coming episodes. I hope you liked it. If you did, then please share it with your friends and followers. Next week we'll discuss how to set up your own personal server.

3. Setting up your personal server

Why you need a server

Last week we created an unhosted javascript/html/markdown editor that can run on a data URL, create other apps as data URLs, load files from the local file system, and also save them again. This doesn't however allow us to communicate with other devices, nor with other users, let alone people in other "web 2.0 worlds". At some point we will want to send and receive emails and chat messages, so to do those things with an unhosted web app, we need a sort of proxy server.

There are three important restrictions of unhosted web apps compared to hosted web apps:

- they are not addressable from the outside. Even if your device has its own static IP address, the application instance (for instance the editor we created last week) is running in one of the tabs of one of your browsers, and does not have the possibility to open any ports that listen externally on the internet address of your device.

- the data they store is imprisoned in one specific device, which is not practical if you use multiple devices, or if your device is damaged, lost or stolen.

- they cannot run when you have your device switched off, and cannot receive data when your device is not online, or on a low-bandwidth connection.

For these reasons, you cannot run your online life from unhosted web apps alone. You will need a personal server on the web. To run your own personal server on the web, there are initially four components you need to procure:

- a virtual private server (VPS),

- a domain name registration (DNR),

- a zone record at a domain name service (DNS), and

- a secure server certificate (TLS).

Shopping for the bits and bobs

The VPS is the most expensive one, and will cost you about 15 US dollars a month from, for instance, Rackspace. The domain name will cost you about 10 US dollars per year from, for instance, Gandi, and the TLS certificate you can get for free from StartCom, or for very little money from other suppliers. You could in theory set up your DNS hosting on your VPS, but usually your domain name registration will include free DNS hosting.

Unless you already have a domain name, you need to make an important choice at this point, namely choosing the domain name that will become your personal identity on the web. Most people have the same username in different web 2.0 worlds, for instance I often use 'michielbdejong'. When I decided it was time for me to create my own Indie Web presence, and went looking for a domain name a few months ago, 'michielbdejong.com' was still free, so I picked that. Likewise, you can probably find some domain name that is in some way related to usernames you already use elsewhere.

I registered my TLS certificate for 'apps.michielbdejong.com' because I aim to run an apps dashboard on there at some point, but you could also go for 'www.' or 'blog.' or whichever subdomain tickles your fancy. In any case, you get one subdomain plus the root domain for free, plus as many extra origins as you want on ports other than 443. So you cannot create endless subdomains, but you can host different kinds of content in subdirectories (like this blog that is hosted on /adventures on the root domain of unhosted.org), and you can get for instance https://michielbdejong.com:10001/ as an isolated javascript origin that will work on the same IP address and TLS certificate.
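To see why a different port gives you an isolated javascript origin, it can help to compute origins explicitly. The sketch below uses the standard `URL` class, which is available in browsers and in recent versions of nodejs; `originOf` and `sameOrigin` are names made up for this example.

```javascript
// Sketch: an origin is the combination of scheme, host and port.
function originOf(url) {
  return new URL(url).origin;
}

function sameOrigin(a, b) {
  return originOf(a) === originOf(b);
}

// A subdirectory shares the origin of the root domain:
console.log(originOf('https://michielbdejong.com/adventures/'));
// -> https://michielbdejong.com

// A different port on the same host is a different origin:
console.log(originOf('https://michielbdejong.com:10001/'));
// -> https://michielbdejong.com:10001

console.log(sameOrigin('https://michielbdejong.com/',
                       'https://michielbdejong.com:10001/'));
// -> false
```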

No Cookie Crew - Warning #1: Last week's tutorial could be followed entirely with cookies disabled. I am probably still the only person in the world who has all cookies disabled, but in case readers of this blog want to join the "No Cookie Crew", I will start indicating clearly where violations are required. Although Rackspace worked without cookies at the time of writing (they used URL-based sessions over https at the time, not anymore), StartCom uses client-side certificates in their wizard and other services are also likely to require you to white-list their cookies. In any case, you need to acquire three actual products here, which means you will need to white-list cookies from second-party e-commerce applications, and also corresponding control-panel applications which are hosted by each vendor. So that in itself is not a violation, but you will also probably need to use some desktop or hosted application to confirm your email address while signing up, and you will need something like an ssh and scp client for the next steps.

First run

Once you have your server running, point your domain name to it, and upload your TLS certificate to it. The first thing you always want to do when you ssh into a new server is to update it. On Debian this is done by typing:

apt-get update

apt-get upgrade

There are several ways to run a server, but here we will use nodejs, because it's fun and powerful. So follow the instructions on the nodejs website to install it onto your server. Nodejs comes with the 'node' executable that lets you execute javascript programs, as well as the 'npm' package manager, that gives you access to a whole universe of very useful high-quality libraries.

Your webserver

Once you have node working, you can use the example from the nodejitsu docs to set up your website; adapted here to make it serve your website over https:

var static = require('node-static'),

http = require('http'),

https = require('https'),

fs = require('fs'),

config = {

contentDir: '/var/www',

tlsDir: '/root/tls'

};

http.createServer(function(req, res) {

var domain = req.headers.host;

req.resume(); // drain the request so that 'end' also fires on newer nodejs versions

req.on('end', function() {

res.writeHead(302, {

Location: 'https://'+domain+'/'+req.url.substring(1),

'Access-Control-Allow-Origin': '*'

});

res.end('Location: https://'+domain+'/'+req.url.substring(1));

});

}).listen(80);

var file = new(static.Server)(config.contentDir, {

headers: {

'Access-Control-Allow-Origin': '*'

}

});

https.createServer({

key: fs.readFileSync(config.tlsDir+'/tls.key'),

cert: fs.readFileSync(config.tlsDir+'/tls.cert'),

ca: fs.readFileSync(config.tlsDir+'/ca.pem')

}, function(req, res) {

file.serve(req, res);

}).listen(443);

Here, tls.key and tls.cert are the secret and public parts of your TLS certificate, and the ca.pem is an extra StartCom chain certificate that you will need if you use a StartCom certificate.
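Since the script above reads these three files at startup, a missing file will crash it with a fairly terse error. The following sketch checks for them first and fails with a more readable message. `loadTlsOptions` is a helper name made up for this example; the file names are the ones used in the script above.

```javascript
var fs = require('fs'),
    path = require('path');

// Sketch: read the three TLS files, and fail early with a readable
// message if one of them is missing.
function loadTlsOptions(tlsDir) {
  var files = { key: 'tls.key', cert: 'tls.cert', ca: 'ca.pem' };
  var options = {};
  Object.keys(files).forEach(function (name) {
    var fullPath = path.join(tlsDir, files[name]);
    if (!fs.existsSync(fullPath)) {
      throw new Error('missing TLS file: ' + fullPath);
    }
    options[name] = fs.readFileSync(fullPath);
  });
  return options; // can be passed as the first argument to https.createServer
}
```

You could then write `https.createServer(loadTlsOptions(config.tlsDir), ...)` in place of the three inline `fs.readFileSync` calls.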

Note that we also set up an http website, that redirects to your https website, and we have added CORS headers everywhere, to do our bit in helping break down the web's "Same Origin" walls.
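To make the redirect logic easier to test in isolation, it can be pulled out into a small pure function, as in this sketch. `buildRedirect` is a name made up for this example. Unlike the script above, it also drops an explicit port from the Host header, on the assumption that you do not want to send people to something like https://example.com:80/.

```javascript
// Sketch: the redirect logic from the http server above as a pure function.
function buildRedirect(hostHeader, requestUrl) {
  // Drop a trailing ':port' from the Host header, if there is one.
  var host = hostHeader.replace(/:\d+$/, '');
  // requestUrl is what nodejs gives you in req.url, e.g. '/blog?x=1'
  var location = 'https://' + host + '/' + requestUrl.substring(1);
  return {
    status: 302,
    headers: {
      'Location': location,
      'Access-Control-Allow-Origin': '*' // CORS: readable from any origin
    }
  };
}

console.log(buildRedirect('example.com', '/adventures/?page=2'));
```

Inside the request handler this would be used as `var r = buildRedirect(req.headers.host, req.url); res.writeHead(r.status, r.headers); res.end();`.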

Using 'forever' to start and stop server processes

Now that you have a script that runs both an http server and an https server, upload it to your server, and then run:

npm install node-static

npm install -g forever

forever start path/to/myWebServer.js

forever list

If all went well, you will now have a new log file in ~/.forever/. Whenever it gets too long, you can issue 'echo > ~/.forever/abcd.log' to truncate the log of forever process 'abcd'.

You can test that your http server redirects to your https website, and you can add some basic placeholder information to the data directory, or copy and paste your profile page from one of your existing web 2.0 identities onto there.

File sharing

One advantage of having your own website with support for TLS is that you can host files for other people there, and as long as your web server does not provide a way to find the file without knowing its URL, only people who know or guess a file's link, and people who have access to your server, will be able to retrieve the file. This includes employees of your VPS providers, as well as employees of any governments who exert power over that provider.

In theory, employees of your TLS certificate provider can also get access if they have man-in-the-middle access to your TCP traffic or to that of the person retrieving the file, or if they manage to poison DNS for your domain name, but those seem very remote possibilities. So if you trust your VPS provider and its government, or you host your server under your own physical control, and you have a good way to generate a URL that nobody will guess, then this is a pretty feasible way to send a file to someone. If you implement it without bugs, then it would be more secure than standard unencrypted email, for instance.
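One way to get a URL that nobody will guess is to put a long random token in the path. The sketch below uses `crypto.randomBytes` from nodejs for that; the function name `unguessableUrl` and the '/shared/' path prefix are made up for this example.

```javascript
var crypto = require('crypto');

// Sketch: build a link that is hard to guess by putting 32 random bytes
// (256 bits) from the system's secure random source in the path.
function unguessableUrl(baseUrl, filename) {
  var token = crypto.randomBytes(32).toString('hex'); // 64 hex characters
  return baseUrl + '/shared/' + token + '/' + encodeURIComponent(filename);
}

console.log(unguessableUrl('https://example.com', 'holiday photos.zip'));
```

You would then place the file at the matching path under your content directory, and send the resulting link only to the person who should be able to retrieve it.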

In the other direction, you can also let other people send files to you; just run something like the following script on your server:

var https = require('https'),

fs = require('fs'),

config = {

tlsDir: '/root/tls',

uploadDir: '/root/uploads',

port: 3000

},

formidable = require('formidable');

https.createServer({

key: fs.readFileSync(config.tlsDir+'/tls.key'),

cert: fs.readFileSync(config.tlsDir+'/tls.cert'),

ca: fs.readFileSync(config.tlsDir+'/ca.pem')

}, function(req, res) {

var form = new formidable.IncomingForm();

form.uploadDir = config.uploadDir;

form.parse(req, function(err, fields, files) {

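if (err) {

res.writeHead(500, {'content-type': 'text/plain'});

res.end('upload failed\n');

return;

}

res.writeHead(200, {'content-type': 'text/plain'});

res.end('upload received, thank you!\n');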

});

}).listen(config.port);

and allow people to post files to it with an html form like this:

<form action="https://example.com:3000/"

enctype="multipart/form-data" method="post">

<input type="file" name="datafile" size="40">

<input type="submit" value="Send">

</form>

Indie Web

If you followed this episode, then you will have spent maybe 10 or 20 dollars, and probably about one working day working out how to fit all the pieces together and get it working, but you will have made a big leap in terms of your technological freedom: you are now a member of the "Indie Web" - the small guard of people who run their own webserver, independently from any big platforms. It's a bit like musicians who publish their own vinyls on small independent record labels. :) And it's definitely something you can be proud of. Now that it's up and running, add some nice pages to your website by simply uploading html files to your server.

From now on you will need your personal server in pretty much all coming episodes. For instance, next week we will explore a young but very powerful web technology, which will form an important basis for all communications between your personal server and the unhosted web apps you will be developing: WebSockets. And the week after that, we will connect your Indie Web server to Facebook and Twitter... exciting! :) Stay tuned, follow us, and spread the word: atom, mailing list, irc channel, twitter, facebook

4. WebSockets

No Cookie Crew - Warning #2: For this tutorial you will need to update your personal server using an ssh/scp client.

WebSockets are a great way to get fast two-way communication working between your unhosted web app and your personal server. It seems the best server-side WebSocket support, at least under nodejs, comes from SockJS (but see also engine.io). Try installing this nodejs script:

var sockjs = require('sockjs'),

fs = require('fs'),

https = require('https'),

config = require('./config.js').config;

function handle(conn, chunk) {

conn.write(chunk);

}

var httpsServer = https.createServer({

key: fs.readFileSync(config.tlsDir+'/tls.key'),

cert: fs.readFileSync(config.tlsDir+'/tls.cert'),

ca: fs.readFileSync(config.tlsDir+'/ca.pem')

}, function(req, res) {

res.writeHead(200);

res.end('connect a WebSocket please');

});

httpsServer.listen(config.port);

var sockServer = sockjs.createServer();

sockServer.on('connection', function(conn) {

conn.on('data', function(chunk) {

handle(conn, chunk);

});

});

sockServer.installHandlers(httpsServer, {

prefix:'/sock'

});

console.log('Running on port '+config.port);

and accompany it by a 'config.js' file in the same directory, like this:

exports.config = {

tlsDir: '/path/to/tls',

port: 1234

};

Start this script with either 'node script.js' or 'forever start script.js', and open this unhosted web app in your browser:

<!DOCTYPE html>

<html lang="en">

<head>

<meta charset="utf-8">

<title>pinger</title>

</head>

<body>

<p>

Ping stats for wss://

<input id="SockJSHost" value="example.com:1234" >

/sock/websocket

<input type="submit" value="reconnect"

onclick="sock.close();" >

</p>

<canvas id="myCanvas" width="1000" height="1000"></canvas>

</body>

<script>

var sock,

graph = document.getElementById('myCanvas')

.getContext('2d');

function draw(time, rtt, colour) {

var x = (time % 1000000) / 1000;//one pixel per second

graph.beginPath();

graph.moveTo(x, 0);

graph.lineTo(x, rtt/10);//1000px height = 10s

graph.strokeStyle = colour;

graph.stroke();

graph.fillStyle = '#eee';

graph.rect(x, 0, 100, 1000);

graph.fill();

}

function connect() {

sock = new WebSocket('wss://'

+document.getElementById('SockJSHost').value

+'/sock/websocket');

sock.onopen = function() {

draw(new Date().getTime(), 10000, 'green');

}

sock.onmessage = function(e) {

var sentTime = parseInt(e.data);

var now = new Date().getTime();

var roundTripTime = now - sentTime;

draw(sentTime, roundTripTime, 'black');

}

sock.onclose = function() {

draw(new Date().getTime(), 10000, 'red');

}

}

connect();

setInterval(function() {

var now = new Date().getTime();

if(sock.readyState==WebSocket.CONNECTING) {

draw(now, 10, 'green');

} else if(sock.readyState==WebSocket.OPEN) {

sock.send(now);

draw(now, 10, 'blue');

} else if(sock.readyState==WebSocket.CLOSING) {

draw(now, 10, 'orange');

} else {//CLOSED or non-existent

draw(now, 10, 'red');

connect();

}

}, 1000);

</script>

</html>

Here is a data URL for it. Open it, replace 'example.com:1234' with your own server name and leave it running for a while. It will ping your server once a second on the indicated port, and graph the round-trip time. It should look something like this:

I have quite unreliable wifi at the place I'm staying now, and even so you see that most packets eventually arrive, although some take more than 10 seconds to do so. Some notes about this:

- The client uses the bare WebSocket exposed by the SockJS server, so that we do not need their client-side library. The main reason is that on a connection as slow as mine, that library would keep falling back to long-polling, whereas I know my browser supports WebSockets, so I do not need that fallback.

- I spent a lot of time writing code that tries to detect when something goes wrong. I experimented with suspending my laptop while wifi was down, then restarting the server-side, and seeing if it could recover. My findings are that it often still recovers, unless it goes into readyState 3 (WebSocket.CLOSED). Whenever this happens, I think you will lose any data that was queued on both client-side and server-side, so make sure you have ways of resending that. Also make sure you discard the closed WebSocket object and open up a new one.

- The WebSocket will time out after 10 minutes of loss of connectivity, and then try to reconnect. In Firefox it does this with a beautiful exponential backoff. The reconnection frequency slows down until it reaches a frequency of once a minute.

- When you have suspended your device (e.g. closed the lid of your laptop) and come back, you will see it flatlining in blue. Presumably it would do another dis- and reconnect if you wait long enough, but due to the exponential backoff, you would probably need to wait up to a minute before Firefox attempts reconnection. So to speed this up, you can click 'Reconnect'. The line in the graph will go purple (you will see 'sock.readyState' went from 1 (open) to 2 (closing)), and a few seconds later it will dis- and reconnect and you are good again. We could probably trigger this automatically, but for now it's good enough.

- There is another important failure situation; my explanation is that this happens when the server closes the connection, but is not able to let the client know about this, due to excessive packet loss. The client will keep waiting for a sign of life from the server, but presumably the server has already given up on the client. I call this "flatlining" because it looks like a flat line in my ping graph. Whenever this happens, if you know that the packet loss problem was just resolved, it is necessary to reconnect. It will take a few seconds for the socket to close (purple line in the graph), but as soon as it closes and reopens, everything is good again. The client will probably always do this after 10 minutes, but you can speed things up this way. This is probably something that can be automated - a script could start polling /sock/info with XHR, and if there is an http response from there, but the WebSocket is not recovering, presumably it is better to close and reopen.

- Chrome and Safari, as opposed to Firefox, do not do the exponential backoff, but keep the reconnection frequency right up. That means that in those browsers you probably never have to explicitly close the socket to force a reconnect. I did not try this in Explorer or Opera.

- Firefox on Ubuntu is not as good at detecting loss of connectivity as Firefox on OSX. This even means the WebSocket can get stuck in WebSocket.CLOSING state. In this case, you have to manually call connect(); from the console, even though the existing WebSocket has not reached WebSocket.CLOSED state yet.

As another example, here's what disconnecting the wifi for 15 minutes looks like:

And this is what "flatlining" looks like - the point where it goes purple is where I clicked "Reconnect".
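Clicking "Reconnect" by hand could probably be automated. Here is a minimal sketch of the decision logic, assuming the page records the time of the last reply it received. The names nextAction, lastReplyTime and maxSilence are illustrative additions, not part of the ping page above, and the threshold you pick is a guess you would have to tune:

```javascript
// readyState values as defined by the WebSocket API
var CONNECTING = 0, OPEN = 1, CLOSING = 2, CLOSED = 3;

// Decide what the once-a-second timer should do next.
// lastReplyTime and maxSilence are hypothetical additions to the ping page.
function nextAction(readyState, lastReplyTime, now, maxSilence) {
  if (readyState === CLOSED) {
    return 'reconnect';   // discard the old socket and open a new one
  }
  if (readyState === OPEN) {
    if (now - lastReplyTime > maxSilence) {
      return 'close';     // flatlining: force a dis- and reconnect
    }
    return 'ping';        // all is well, send the next timestamp
  }
  return 'wait';          // CONNECTING or CLOSING
}
```

The timer would then call sock.close() on 'close', connect() on 'reconnect', and sock.send(now) on 'ping', updating lastReplyTime inside sock.onmessage.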

All in all, WebSockets are a good, reliable way to connect your unhosted web app to your personal server. Next week, as promised, we will use a WebSocket for a Facebook and Twitter gateway running in nodejs. So stay tuned!

5. Facebook and Twitter from nodejs

No Cookie Crew - Warning #3: This tutorial still requires you to update your personal server using an ssh/scp client. Also, to register your personal server with the respective APIs, you need to log in to Twitter and Facebook, accepting their cookies onto your device one last time...

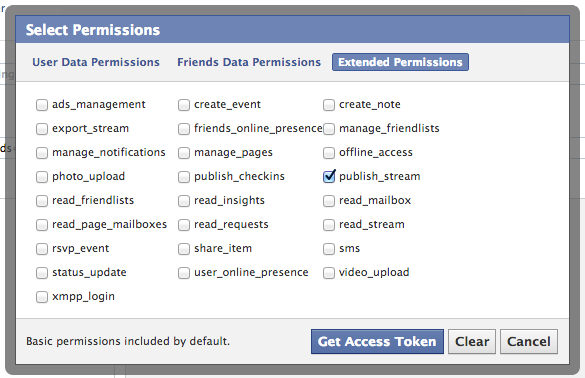

Connecting to Facebook

Connecting to Facebook is easier than you might think. First, of course you need a Facebook account, so register one if you don't have one yet. Then you need to visit the Graph API Explorer, and click 'Get Access Token'. Log in as yourself, and click 'Get Access Token' a second time to get the dialog for requesting access scopes. In there, go to 'Extended Permissions', and select 'publish_stream'; it should look something like this:

The OAuth dance will ask you to grant Graph API Explorer access to your Facebook account, which obviously you have to allow for this to work.

Once you are back on the Graph API Explorer, you can play around to browse the different kinds of data you can now access. You can also read more about this in Facebook's Getting Started guide. For instance, if you do a POST to /me/feed while adding a field "message" (click "Add a field") with some string value, that will post the message to your timeline.

But the point here is to copy the token from the explorer tool, and save it in a config file on your personal server. Once you have done that, you can use the https.request function from nodejs to make http calls to Facebook's API. Here is an example script that updates your status in your Facebook timeline:

var https = require('https'),

config = require('./config').config;

function postToFacebook(str, cb) {

var req = https.request({

host: 'graph.facebook.com',

path: '/me/feed',

method: 'POST'

}, function(res) {

res.setEncoding('utf8');

res.on('data', function(chunk) {

console.log('got chunk '+chunk);

});

res.on('end', function() {

console.log('response end with status '+res.statusCode);

});

});

req.end('message='+encodeURIComponent(str)

+'&access_token='+encodeURIComponent(config.facebookToken));

console.log('sent');

};

postToFacebook('test from my personal server');

The result will look something like this:

If in the menu of the Graph API Explorer you click "apps" on the top right (while logged in as yourself), then you can define your own client app. The advantage of this is that it looks slightly nicer in the timeline, because you can set the 'via' attribute to advertise your personal server's domain name, instead of the confusing and incorrect 'via Graph API Explorer':

Normally, you would see the name of an actual application there, for instance 'via Foursquare' or 'via Spotify'. But since we are taking the application out of the server and putting it into the browser, and the access token is guarded by your personal server, not by the unhosted web app you may use to edit the text and issue the actual posting command, it is correct here to say that this post was posted via your personal server.

This means that for everybody who federates their personal server with Facebook, there will effectively be one "Facebook client app", but each one will have only one user, because each user individually registers their own personal gateway server as such.

There is a second advantage of registering your own app: it gives you an appId and a clientSecret with which you can exchange the one-hour token for a 60-day token. To do that, you can call the following nodejs function once, giving your appId, clientSecret, and the short-lived token as arguments:

var https = require('https');

function longLiveMyToken(token, appId, clientSecret) {

var req = https.request({

host: 'graph.facebook.com',

path: '/oauth/access_token',

method: 'POST'

}, function(res) {

res.setEncoding('utf8');

res.on('data', function(chunk) {

console.log(chunk);

});

res.on('end', function() {

console.log('status: '+res.statusCode);

});

});

req.end('grant_type=fb_exchange_token'

+'&client_id='+encodeURIComponent(appId)

+'&client_secret='+encodeURIComponent(clientSecret)

+'&fb_exchange_token='+encodeURIComponent(token)

);

};

Once you run this script on your server, you will see the long-lived token on the console output, so you can paste it from there into your config file. You can also use the Graph API Explorer to "Debug" access tokens - that way you see their permissions scope and their time to live. As far as I know you will have to repeat this token exchanging process every 60 days, but maybe there is some way we could automate that. We will worry about that in two months from now. :)

Connecting to Twitter

And just because this is so easy in nodejs, here is the equivalent server-side script for twitter as well:

var twitter = require('ntwitter'),

config = require('./config').config;

var twit = new twitter({

consumer_key: config.twitterConsumerKey,

consumer_secret: config.twitterConsumerSecret,

access_token_key: config.twitterAccessToken,

access_token_secret: config.twitterAccessTokenSecret

});

function postToTwitter(str, cb) {

twit.verifyCredentials(function (err, data) {

if (err) {

cb("Error verifying credentials: " + err);

} else {

twit.updateStatus(str, function (err, data) {

if (err) {

cb('Tweeting failed: ' + err);

} else {

cb('Success!')

}

});

}

});

}

postToTwitter('Sent from my personal server', function(result) {

console.log(result);

});

To obtain the config values for the twitter script, you need to log in to dev.twitter.com/apps and click 'Create a new application'. You can, again, put your own domain name as the app name, because it will be your server that effectively acts as the connecting app. Under 'Settings', set the Application Type to 'Read, Write and Access direct messages', and by default, for the twitter handle by which you registered the app, the app will have permission to act on your behalf.

At the time of writing, there is a bug in ntwitter which means that tweets with apostrophes or exclamation marks will fail. A patch is given there, so if you are really eager to tweet apostrophes then you could apply that, but I haven't tried this myself. I just take this into account until the bug is fixed, and rephrase my tweets so that they contain no apostrophes. :)

A WebSocket-based gateway

The next step is to connect this up to a WebSocket. We simply integrate our postToFacebook and postToTwitter functions into the pinger.js script that we created last week. One thing to keep in mind though, is that we don't want random people guessing the port of the WebSocket, and being able to freely post to your Facebook and Twitter identities. So the solution for that is that we give out a token to the unhosted web app from which you will be connecting, and then we make it send that token each time it wants to post something.

Upload this server-side script, making sure you have the right variables in a 'config.js' file in the same directory. You can run it using 'forever':

var sockjs = require('sockjs'),

fs = require('fs'),

https = require('https'),

twitter = require('ntwitter'),

config = require('./config').config,

twit = new twitter({

consumer_key: config.twitterConsumerKey,

consumer_secret: config.twitterConsumerSecret,

access_token_key: config.twitterAccessToken,

access_token_secret: config.twitterAccessTokenSecret

});

function postToTwitter(str, cb) {

twit.verifyCredentials(function (err, data) {

if (err) {

cb("Error verifying credentials: " + err);

} else {

twit.updateStatus(str, function (err, data) {

if (err) {

cb('Tweeting failed: ' + err);

} else {

cb('Success!')

}

});

}

});

}

function postToFacebook(str, cb) {

var req = https.request({

host: 'graph.facebook.com',

path: '/me/feed',

method: 'POST'

}, function(res) {

res.setEncoding('utf8');

var body = '';//response text, kept apart from the 'str' argument

res.on('data', function(chunk) {

body += chunk;

});

res.on('end', function() {

cb({

status: res.statusCode,

text: body

});

});

});

req.end("message="+encodeURIComponent(str)

+'&access_token='+encodeURIComponent(config.facebookToken));

};

function handle(conn, chunk) {

var obj;

try {

obj = JSON.parse(chunk);

} catch(e) {

}

if(obj && typeof(obj) == 'object' && obj.secret == config.secret

&& obj.object && typeof(obj.object) == 'object') {//null also has typeof 'object'

if(obj.world == 'twitter') {

postToTwitter(obj.object.text, function(result) {

conn.write(JSON.stringify(result));

});

} else if(obj.world == 'facebook') {

postToFacebook(obj.object.text, function(result) {

conn.write(JSON.stringify(result));

});

} else {

conn.write(chunk);

}

}

}

var httpsServer = https.createServer({

key: fs.readFileSync(config.tlsDir+'/tls.key'),

cert: fs.readFileSync(config.tlsDir+'/tls.cert'),

ca: fs.readFileSync(config.tlsDir+'/ca.pem')

}, function(req, res) {

res.writeHead(200);

res.end('connect a WebSocket please');

});

httpsServer.listen(config.port);

var sockServer = sockjs.createServer();

sockServer.on('connection', function(conn) {

conn.on('data', function(chunk) {

handle(conn, chunk);

});

});

sockServer.installHandlers(httpsServer, {

prefix:'/sock'

});

console.log('up');

And then you can use this simple unhosted web app as your new social dashboard.
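The messages that such a dashboard sends follow from the handle function in the server script: a JSON object with a secret, a world, and an object that carries the text. A minimal sketch, assuming an already-open WebSocket called sock; buildPost is a hypothetical helper name:

```javascript
// Build the JSON string that handle() on the server expects.
function buildPost(secret, world, text) {
  return JSON.stringify({
    secret: secret,        // must equal config.secret on the server
    world: world,          // 'twitter' or 'facebook'
    object: { text: text } // the status update itself
  });
}

// In the browser, with sock being an open WebSocket to the gateway:
// sock.send(buildPost(mySecret, 'twitter', 'Sent from my personal server'));
```

Any message without the right secret is silently ignored by the server, and a message with an unknown world is echoed back, which keeps last week's ping test working.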

Federated or just proxied?

So now you can stay in touch with your friends on Facebook and Twitter, without you yourself ever logging in to either of these walled gardens, the monopoly platforms of web 2.0.

Several people here at Hacker Beach have reacted to drafts of this post, saying that proxying your requests through a server does not change the fact that you are using these platforms. I understand this reaction, but I do not agree, for several reasons:

Separating applications from namespaces.

Many online services offer a hosted web app, combined with a data storage service. But apart from application hosting and data storage, many of them define a "namespace", a limited context that confines who and what you interact with, and a walled garden in which your data "lives".

For instance, the Facebook application will allow you to read about things that happen in the Facebook world, but not outside it. As an application, it is restricted to Facebook's "namespace". This means this hosted application gives you a restricted view of the online universe; it is a software application that is specific to only one namespace. As an application, we can say that it is "namespace-locked", very similar to the way in which a mobile phone device can be "SIM-locked" to a specific SIM-card provider.

The way we circumvent this restriction is by interacting with the namespace of a service *without* using the namespace-locked application that the service offers. Namespace-locked applications limit our view of the world to what the application provider wants us to interact with.

So by communicating with the API of a service instead of with its web interface, we open up the possibility of using an "unlocked" application which we can develop ourselves, and improve and adapt however we want, without any restrictions imposed by any particular namespace provider. While using such a namespace-lock-free application, our view of the online world will be more universal and free, and less controlled and influenced by commercial interests.

Avoiding Cookie federation

Both Facebook and Google will attempt to platformize your web experience. Using your server as a proxy between your browser and these web2.0 platforms avoids having their cookies in your browser while you browse the rest of the web.

Your account in each world is only a marionette; you own your identity.

Since you use your own domain name and your own webserver as your main identity, the identities you appear as on the various closed platforms are no longer your main online identity; they are now a shadow, or hologram, of your main identity, which lives primarily on your Indie Web site.

Your data is only mirrored; you own the master copy.

Since all posts go through your server on the way out, you can easily relay posts outside the namespace you originally posted them to, and hold on to them as long as you want in your personal historical data log. You can also easily post the same content to several namespaces at the same time when this makes sense.

What if they retract your API key and kick your "app" off their platform?

That's equivalent to a mailserver blacklisting you as a sender; it is not a retraction of your right to send messages from your server, just (from our point of view) a malfunction of the receiving server.

Conclusion

The big advantage of using a personal server like this is that you are only sending data to each web 2.0 world when this is needed to interact with other people on there. You yourself are basically logged out of web 2.0, using only unhosted web apps, even though your friends still see your posts and actions through these "puppet" identities. They have no idea that they are effectively looking at a hologram when they interact with you.

In order to use Facebook and Twitter properly from an unhosted web app, you will also need things like controlling more than one Twitter handle, receiving and responding to Facebook friend requests, and everything else. These basic examples mainly serve to show you how easy it is to build a personal server that federates seamlessly with the existing web 2.0 worlds.

Also, if you browse through the API documentation of both Twitter and Facebook, you will see all kinds of things you can control through there. So you can go ahead yourself and add all those functions to your gateway (just make sure you always check if the correct secret is being sent on the WebSocket), and then build out this social dashboard app to do many more things.

You may or may not be aware that most other web2.0 websites actually have very similar REST APIs, and when the API is a bit more complicated, there is probably a nodejs module available that wraps it up, like in the case of Twitter here. So it should be possible this way to, for instance, create an unhosted web app that posts github issues, using your personal server as a gateway to the relevant APIs.

Have fun! I moved this episode forward in the series from where it was originally, so that you can have a better feeling of where we are going with all this, even though you still have to do all of this through ssh. Next week we will solve that though, as we add what you could call a "webshell" interface to the personal server. That way, you can use an unhosted web app to upload, modify, and run nodejs scripts on your server, as well as doing any other server maintenance which you may now be doing via ssh. This will be another important step forward for the No Cookie Crew. See you next week: same time, same place!

6. Controlling your server over a WebSocket

No Cookie Crew - Warning #4: This tutorial is the last time you still need to update your personal server using an ssh/scp client.

Web shell

Unhosted web apps are still a very new technology. That means that as a member of the No Cookie Crew, you are as much a mechanic as you are a driver. You will often be using unhosted web apps with the web console open in your browser, so that you can inspect variables and issue javascript commands to script tasks for which no feature exists. This is equally true of the services you run on your personal data server.

So far, we have already installed nodejs, "forever", a webserver with TLS support, as well as gateways to Twitter and to Facebook. And as we progress, even though we aim to keep the personal server as minimal and generic as possible, several more pieces of software and services will be added to this list. To install, configure, maintain, and (last but not least) develop these services, you will need to have a way to execute commands on your personal server.

In practice, it has even proven useful to run a second personal server within your LAN, for instance on your laptop, which you can then access from the same device or from for instance a smartphone within the same wifi network. Even though it cannot replace your public-facing personal server, because it is not always on, it can make certain server tasks, like for instance streaming music, more practical. We'll talk more about this in future posts, but for now suffice to say that if you choose to run two personal servers, then of course you need "web shell" access to both of them in order to administer them effectively from your browser.

There are several good software packages available which you can host on your server to give it a "web shell" interface. One that I can recommend is GateOne. If you install it on Ubuntu, make sure you apt-get install dtach, otherwise the login screen will crash repeatedly. But other than that, I have been using it as an alternative to my laptop's device console (Ctrl-Shift-F1), with good results so far.

The GateOne service is not actually a webshell directly into the server it runs on, but rather an ssh client that allows you to ssh into the same (or a different) server. This means you will also need to run ssh-server, so make sure all users on there have good passwords.

Unhosted shell clients

You can host web shell software like this on your server(s), but this means the application you use (the web page in which you type your interactive shell commands) is chosen and determined at install-time. This might be OK for you if you are installing your personal server yourself and you are happy with the application you chose, but it is a restriction on your choice of application if this decision was made for you by your server hosting provider. This problem can be solved by using an unhosted shell client instead of a hosted one. The principle of separating unhosted web apps from minimal server-side gateways and services is of course the main topic of this blog series, so let's also try to apply it here.

A minimal way to implement a command shell service would be for instance by opening a WebSocket on the server, and executing commands that get sent to it as strings. You could put this WebSocket on a hard-to-guess URL, so that random people cannot get access to your server without your permission.
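A hard-to-guess URL is only as good as the randomness behind it. One way to produce such a path, as a sketch: makeSecretPath is a hypothetical helper name, and 32 bytes is just a comfortable choice of length.

```javascript
var crypto = require('crypto');

// 32 random bytes, hex-encoded: 64 characters that are safe in a URL path.
function makeSecretPath() {
  return '/' + crypto.randomBytes(32).toString('hex');
}

// Run once, then store the result in your config file.
console.log(makeSecretPath());
```

The resulting string could then take the place of the '/sock' prefix we passed to installHandlers last week. Keep in mind that the path is only secret as long as the connection uses TLS.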

An unhosted web app could then provide an interface for typing a command and sending it, pretty much exactly in the same way as we did for the social dashboard we built last week.

But there are two things in that proposal that we can improve upon. First, we should document the "wire protocol", that is, the exact format of messages that the unhosted web app sends into the WebSocket, and that come back out of it in response. And second, if we open a new WebSocket for each service we add to our personal server, then this can become quite unwieldy. It would be nicer to have one clean interface that can accept various kinds of commands, and that dispatches them to one of several functionalities that run on the server.

Sockethub to the rescue

Nick Jennings, who was also at the 2012 unhosted unconference, and who is now also here at Hacker Beach, recently received NLnet funding to start a project called Sockethub. It is a redis-based nodejs program that introduces exactly that: a WebSocket interface that lets unhosted web apps talk to your server. And through your server, they can effectively communicate with the rest of the world, both in a sending and a receiving capacity.

So let's have a look at how that would work. The command format for Sockethub is loosely based on ActivityStreams. In that regard it is very similar to Evan Prodromou's new project, pump.io. A Sockethub command has three required fields: rid, platform, and verb. Since multiple commands may be outstanding at the same time, the rid (request identifier) helps to know which server response is a reply to which of the commands you sent. The platform determines which code module will handle the request. That way, it's easy to write modules for Sockethub almost like writing a plugin for something. And finally, the verb determines which other fields are required or optional. Some verbs may only exist for one specific platform, but others, like 'post', make sense for multiple platforms, so their format is defined only once, in a platform-independent way wherever possible.
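To illustrate how the rid ties responses to commands, here is a sketch of the bookkeeping an unhosted web app could do on the client side. It is not taken from the Sockethub code: createCommander, its send callback, and the use of a counter for the rid are all assumptions, and it assumes exactly one response per command.

```javascript
// Client-side bookkeeping for outstanding commands, keyed by rid.
function createCommander(send) {
  var nextRid = 1, pending = {};
  return {
    // Send a command; cb is called later with the matching response.
    command: function(platform, verb, fields, cb) {
      var msg = { rid: nextRid++, platform: platform, verb: verb };
      Object.keys(fields).forEach(function(key) {
        msg[key] = fields[key]; // verb-specific fields, e.g. 'object'
      });
      pending[msg.rid] = cb;
      send(JSON.stringify(msg));
      return msg.rid;
    },
    // Feed every incoming WebSocket message to this function.
    receive: function(data) {
      var response = JSON.parse(data);
      var cb = pending[response.rid];
      if (cb) {
        delete pending[response.rid];
        cb(response);
      }
    }
  };
}
```

In the browser you would pass sock.send.bind(sock) as the send callback, and call receive(e.data) from sock.onmessage.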

For executing shell commands, we will define a 'shell' platform that exposes an 'execute' verb. The way to do this in Sockethub is described in the instructions for adding a platform. The API has changed a little bit now, but at the time of writing, this meant adding a file called shell.js into the lib/protocols/sockethub/platforms/ folder of the Sockethub source tree, with the following content:

var https = require('https'),

exec = require('child_process').exec;

module.exports = {

execute: function(job, session) {

exec(job.object, function(err, stdout, stderr) {

session.send({

rid: job.rid,

result: (err?'error':'completed'),

stdout: stdout,

stderr: stderr

});

});

}

};

We also need to add 'shell' to the PLATFORMS variable in config.js, add the 'execute' verb and the 'shell' platform to lib/protocols/sockethub/protocol.js, and add the 'execute' command to lib/protocols/sockethub/schema_commands.js with a required string property for the actual shell command we want to have executed. We'll call this parameter 'object', in keeping with ActivityStreams custom:

...

"execute" : {

"title": "execute",

"type": "object",

"properties": {

"object": {

"type": "string",

"required" : true

}

}

},

...

Conclusion

Sockethub was still only a week old when I wrote this episode in January 2013, but it promises to be a very useful basis for many of the gateway functionalities we want to open up to unhosted web apps, such as access to email, social network platforms, news feeds, bittorrent, and any other platforms that expose server-to-server APIs, but are not directly accessible for unhosted web apps via a public cross-origin interface.

Especially the combination of Sockethub with remoteStorage and a web runtime like for instance Firefox OS looks like a promising all-round app platform. More next week!

7. Adding remote storage to unhosted web apps

Apps storing user data on your personal server

So far our unhosted web apps have relied on the browser's "Save as..." dialog to save files. We have set up our own personal server, but have only used it to host a profile and to run Sockethub so that we can relay outgoing messages through it, to the servers it federates with. This week we will have a look at remoteStorage, a protocol for allowing unhosted web apps to use your personal server as cloud storage.

The protocol by which a client interacts with a remoteStorage server is described in draft-dejong-remotestorage, an IETF Internet-Draft that is currently at version -03. At its core are the http protocol, TLS, and CORS headers.

A remoteStorage server allows you to store, retrieve, and remove documents in a directory structure, using http PUT, GET, and DELETE verbs, respectively. It will respond with CORS headers in the http response, so that your browser will not forbid your web app from making requests to the storage origin.
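To make that concrete, here is a sketch of how such requests could be put together. The helper name storageRequest and the example URLs are made up for illustration, and I am assuming the access token is sent as an OAuth bearer token in the Authorization header:

```javascript
// Build the pieces of an http request to a remoteStorage server.
// storageRoot is the base URL of the user's storage, token the OAuth token.
function storageRequest(method, storageRoot, path, token, body, contentType) {
  var req = {
    method: method, // 'GET', 'PUT' or 'DELETE'
    url: storageRoot.replace(/\/$/, '') + '/' + path.replace(/^\//, ''),
    headers: { Authorization: 'Bearer ' + token }
  };
  if (method === 'PUT') {
    req.headers['Content-Type'] = contentType || 'application/octet-stream';
    req.body = body;
  }
  return req;
}

// Example: store a small text document.
var put = storageRequest('PUT', 'https://storage.example.com/bob/',
    '/documents/notes/hello.txt', 'abc123', 'hello world', 'text/plain');
console.log(put.method + ' ' + put.url);
```

The resulting method, url, headers and body can be handed to XMLHttpRequest or a similar http client in the browser.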

As an app developer, you will not have to write these http requests yourself. You can use the remotestorage.js library (which now also has experimental support for cross-origin Dropbox and GoogleDrive storage).

Reusing reusable user data

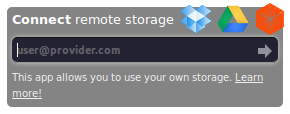

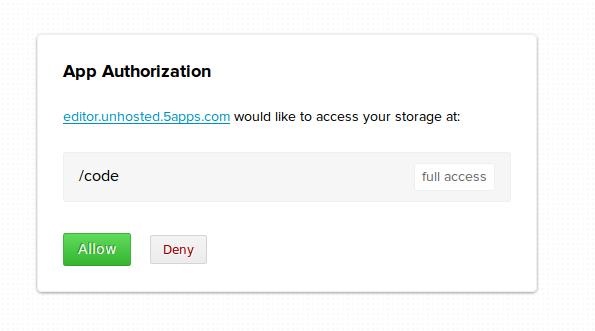

The remotestorage.js library is divided up into modules (documents, music, pictures, ...), one for each type of data. Your app would typically only request access to one or two such modules (possibly read-only). The library then takes care of displaying a widget in the top right of the window, which will obtain access to the corresponding parts of the storage using OAuth2's Implicit Grant flow:

The Implicit Grant flow is special in that there is no requirement for server-to-server communication, so it can be used by unhosted web apps, despite their lack of server backend. The OAuth "dance" consists of two redirects: to a dialog page hosted by the storage provider, and back to the URL of the unhosted web app. On the way back, the storage provider will add an access token to the URL fragment, so the part after the '#' sign. That means that the token is not even sent to the statics-server that happens to serve up the unhosted web app. Of course the app could contain code that does post the data there, but at least such token leaking would be detectable.
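The last step of that dance, reading the token from the URL fragment, takes only a few lines. This is a sketch rather than what remotestorage.js does internally; tokenFromUrl is a hypothetical helper name, while access_token is the parameter name that OAuth2 defines for the Implicit Grant flow:

```javascript
// Extract the access token from a redirect URL such as
// https://app.example.com/#access_token=abc123&token_type=bearer
function tokenFromUrl(url) {
  var hashIndex = url.indexOf('#');
  if (hashIndex === -1) {
    return null; // no fragment at all
  }
  var params = new URLSearchParams(url.slice(hashIndex + 1));
  return params.get('access_token'); // null if the parameter is absent
}
```

In the browser you would call tokenFromUrl(window.location.href) when the app loads, and then probably clear the fragment so that the token does not linger in the address bar.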

By default, OAuth requires each relying party to register with each service provider, and the remoteStorage spec states that servers "MAY require the user to register applications as OAuth clients before first use", but in practice most remoteStorage servers allow their users full freedom in their choice of which apps to connect with.

As an app developer you don't have to worry about all the ins and outs of the OAuth dance that goes on when the user connects your app to their storage. You just use the methods that the modules expose.

Since there are not yet a lot of modules, and those that exist don't have a lot of methods in them yet, you are likely to end up writing and contributing (parts of) modules yourself. Instructions for how to do this are all linked from remotestorage.io.

Cloud sync should be transparent

As an app developer, you only call methods that are exposed by the various remotestorage.js modules. The app is not at all concerned with all the data synchronization that goes on between its instance of remotestorage.js, other apps running on the same device, apps running on other devices, and the canonical copy of the data on the remoteStorage server. All the sync machinery is "behind" each module, so to speak, and the module will inform the app when relevant data comes in.

Since all data your app touches needs to be sent to the user's remoteStorage server, and changes can also arrive from there without prior warning, we have so far found it easiest to develop apps using a variation on what emberjs calls "the V-model".

Actions like mouse clicks and key strokes from the user are received by DOM elements in your app. Usually, this DOM element could determine by itself how it should be updating its appearance in reaction to that. But in the V-model, these actions are only passed through.

The DOM element would at first leave its own state unchanged, passing the action to the controller, to the javascript code that implements the business logic and determines what the result (in terms of data state) of the user action should be. This state change is then effectuated by calling a method in one of the remoteStorage modules. So far, nothing has changed on the screen yet.

If we were to completely follow the V-model, then the change would first move further down to the storage server, and then ripple all the way back up (in the shape of a letter V), until it reaches the actual DOM elements again, and updates their visual state.

But we want our apps to be fast, even under bad network connections. In any case, waiting an entire http round-trip time before giving the user feedback about an action would generally take too long, even when the network conditions are good.

This is why remoteStorage modules receive change events about outgoing changes at the moment the change travels out over the wire, and not after the http request completes. We jokingly call this concept Asynchronous Synchronization: the app does not have to wait for synchronization to finish; synchronization with the server happens asynchronously, in the background.
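The idea can be shown with a toy store. This is not the remotestorage.js API: createStore, put, get, onChange and the sendToServer callback are all hypothetical names, and sendToServer is assumed to start an http request and return immediately.

```javascript
// A toy store that notifies the app first and syncs in the background.
function createStore(sendToServer) {
  var data = {}, listeners = [];
  return {
    onChange: function(listener) {
      listeners.push(listener);
    },
    put: function(path, value) {
      var oldValue = data[path];
      data[path] = value;
      // 1. tell the app right away, so the screen can update
      listeners.forEach(function(listener) {
        listener({ path: path, oldValue: oldValue, newValue: value });
      });
      // 2. start pushing the change out; its completion is not awaited
      sendToServer(path, value);
    },
    get: function(path) {
      return data[path];
    }
  };
}
```

The controller calls put, the change handler redraws the DOM, and the user never waits for the network. A real implementation would also need to deal with failed requests and with changes coming in from the server.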

Two easy ways to connect at runtime

We mentioned that the remoteStorage spec uses OAuth to allow a remotestorage.js module to get access, at runtime, to its designated part of the user's storage. To allow the remotestorage.js library to make contact with the user's remoteStorage server, the user inputs their remoteStorage address into the widget at the top right of the page. A remoteStorage address looks like an email address, in that it takes the form 'user@host'. But here of course 'host' is your remoteStorage provider, not your email provider, and 'user' is whatever username you have at that provider.

The protocol that is used to discover the connection details from this 'user@host' string is called webfinger. Luckily, webfinger supports CORS headers, so it can be queried from an unhosted web app without needing to go through a server for that. We actually successfully campaigned for that support last year once we realized how vital this is for unhosted web apps. It is very nice to see how the processes around open standards on the web actually allowed us to put this issue on the agenda in this way.
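As an illustration, this is roughly how a 'user@host' address could be turned into a lookup URL. The sketch assumes the server implements webfinger in the form that was later standardized as RFC 7033; servers from the time of writing may use an older discovery format. webfingerUrl is a hypothetical helper name:

```javascript
// Turn 'user@host' into a webfinger lookup URL (RFC 7033 style).
function webfingerUrl(address) {
  var parts = address.split('@');
  if (parts.length !== 2 || !parts[0] || !parts[1]) {
    throw new Error('expected an address of the form user@host');
  }
  return 'https://' + parts[1] + '/.well-known/webfinger?resource='
    + encodeURIComponent('acct:' + address);
}
```

The response to a GET on that URL is a JSON document with links, one of which would point at the user's storage.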

So the way this looks to the user is like this:

- Click 'connect remoteStorage'

- Type your 'user@host' remoteStorage address into the widget

- Log in to your remoteStorage provider (with Persona or otherwise)

- Review which modules the app requests

- If it looks OK, click 'Accept'

- You are back in the app and your data will start appearing

We call this the 'app first flow'. Steps 4 and 5 will look something like this (example shown is 5apps):

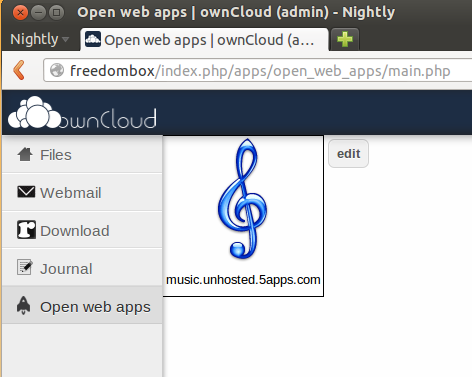
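Behind these steps sits an OAuth dialog. The following is a sketch only, assuming the implicit grant flow and one scope entry per module in the form 'module:rw'. The endpoint URL, the function names, and the choice of the app's origin as `client_id` are assumptions for illustration, not the library's actual code.

```javascript
// Build the URL of the OAuth dialog (implicit grant: response_type=token).
// authEndpoint would come from the webfinger lookup; here it is made up.
function authorizeUrl(authEndpoint, appUrl, modules) {
  const params = new URLSearchParams({
    client_id: new URL(appUrl).origin,   // assumption: the app is identified by its origin
    redirect_uri: appUrl,
    response_type: 'token',
    scope: modules.join(' ')             // e.g. 'tasks:rw contacts:r'
  });
  return authEndpoint + '?' + params.toString();
}

// After the user clicks 'Accept', the provider redirects back to the app
// with the token in the URL fragment, e.g. '#access_token=abc&token_type=bearer'.
function tokenFromFragment(hash) {
  return new URLSearchParams(hash.replace(/^#/, '')).get('access_token');
}
```

The list of modules in the scope is what the user gets to review before accepting, and the token travels in the fragment, so it never reaches the server that hosts the app's static files.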

Another way to connect an unhosted web app and a remoteStorage account at runtime is if you have an app launch panel that is already linked to your remoteStorage account. You will get this for instance when you install the ownCloud app. Apps will simply appear with their icons in the web interface of your storage server, and because you launch them from there, there is no need to explicitly type in your remoteStorage address once the app opens.

We call this the 'storage first flow'; François invented it this summer when he was in Berlin. The launch screen will probably look a bit like the home screen of a smartphone, with one icon per app (example shown is ownCloud):

Featured apps

The remotestorage.js library had just reached its first beta version (labeled 0.7.0) at the time of writing (it is now at version 0.10), and we have collected a number of featured apps that use this version of the library. So far, I am using the music, editor and grouptabs apps for my real music-listening, text-editing, and peer-to-peer bookkeeping needs, respectively.

There is also a minimal writing app on there called Litewrite, a video-bookmarking app called Vidmarks, a generic remoteStorage browser, and a number of demo apps. If you don't have a remoteStorage account yet, you can get one at 5apps. For more options and more info about remoteStorage in general, see remotestorage.io.

After you have used the todo app to add some tasks to your remote storage, try out the unhosted time tracker app. You will see it retrieve your task list from your remote storage even though you added those tasks using a different app. So that is a nice first demonstration of how remoteStorage separates the data you own from the apps you happen to use.

Remote storage is of course a vital piece of the puzzle when using unhosted web apps, because they have no per-app server-side database backend of their own. The remoteStorage protocol and the remotestorage.js library are something many of us have been working on really hard for years, and since last week, finally, we are able to use it for a few real apps. So we are really enthusiastic about this recent release, and hope you enjoy it as much as we do! :)

8. Collecting and organizing your data

As a member of the No Cookie Crew you will be using unhosted web apps for everything you currently use hosted web apps for. None of these apps will store your user data, because they do not have a server backend to store it on. You will have to store your data yourself.

Collecting and organizing your own data is quite a bit of work, depending on how organized you want to be about it. This work was usually done by the developers and system administrators of the hosted web apps you have been using. The main task is to "tidy your room", like a kid putting all the toys back in the right storage place inside their bedroom.

Collecting your data from your own backups

We sometimes like to pretend that the Chromebook generation is already here, and that all our data is in the cloud. In reality, obviously, a lot of our data is still just on our laptop hard disk, on external hard disks, on USB sticks, and on DVDs that we created over the years.

The first step towards making this data usable with unhosted web apps is to put it all together in one place. When I started doing this, I realised I have roughly four types of data, if you split it by the reason why I'm keeping it:

- Souvenirs: mainly folders with photos, and videos. I hardly ever access these, but at the same time they are in a way the most valuable, since they are unique and personal, and represent powerful memories.

- Products: things I published in the past, like for instance papers I wrote as a student, code I published over the years, and the content of my website. In several cases I have source files that were not published as such, for instance original vector graphics files of designs that were published only as raster images, or screen-printed onto a T-shirt. It's all the original raw copies of the things that took me some effort to create, and that I may want to reuse in the future.

- Media Cache: my music collection, mostly. It used to be that when you lost a CD, you could no longer listen to it, but for all but the most obscure and rare albums, this is no longer the case, and should you lose this data, then it's easy to get it back from elsewhere. The only reason you cache it is basically so that you don't have to stream it.

- Working Set: the files I open and edit most often, but that are part of a product that is not finished yet, or that (unlike souvenirs) have only a temporary relevance.

Most work goes into pruning the ever growing Working Set: determining which files and folders can be safely deleted (or moved to the "old/" folder), archiving all the source files of products I've published, and deciding which souvenirs to keep and which ones really are just more of the same.

My "to do" list and calendar clearly fit into "Working Set", but the one thing that doesn't really fit into any of this is my address book. It is at the same time part of my working set, my products, and my souvenirs. I use it on a daily basis, but many contacts in there are from the past, and I only keep them just in case some day I want to reuse those contacts for a new project, and of course there is also a nostalgic value to an address book.

Some stuff on your Indie Web server, the rest on your FreedomBox server

At first, we had the idea of putting all this data of various types into one remoteStorage account. This idea quickly ran into three problems: first, after scanning in all my backup DVDs, even after removing all the duplicate folders, I had gathered about 40 Gigs, and my server only has 7 Gigs of disk space. It is possible to get a bigger server of course, but this didn't seem like an efficient thing to spend money on, especially for the Media Cache data.

Second, I am often in places with limited bandwidth, and even if I uploaded 40 Gigs to my remote server, it would still be a waste of resources to try to stream music from there for hours on end.

Third, even though I want to upload my photos to a place where I can share them with friends, I have a lot of my photos in formats that take up several Megabytes, whereas photos you share online would typically be closer to 50 KB for good online browsing performance. So even if I upload all my photos to my Indie Web server, I would want to upload the "web size" version of them, and not the huge originals.

After some discussion with Basti and François, I concluded that having one remote storage server is not enough to cover all use cases: you need two. The "Indie Web" one should be always on, on a public domain name, and the "FreedomBox" one should be in your home.

On your Indie Web server, you would store only a copy of your Working Set data, plus probably sized-down derivatives of some of your products and souvenirs. On your FreedomBox you would simply store everything.

This means you will already have to do some manual versioning, probably, and think about when to upload something to your Indie Web server. At some point we will probably want to build publishing apps that connect to both accounts and take care of this, but for now, since we have webshell access to both servers, we can do this with a simple scp command.
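The split between the two servers can be written down as a small lookup. This is just a sketch of the rule of thumb described above; the function name, the property names and the category labels are made up for illustration.

```javascript
// Where each of the four data types ends up (illustrative names only).
function placement(category) {
  const home = { freedomBox: 'original' };   // the home server keeps everything
  switch (category) {
    case 'workingSet':
      return Object.assign({}, home, { indieWeb: 'copy' });
    case 'products':
    case 'souvenirs':
      // only some items, and only as sized-down derivatives
      return Object.assign({}, home, { indieWeb: 'sized-down derivative' });
    case 'mediaCache':
      return Object.assign({}, home);        // too bulky to store and stream remotely
    default:
      throw new Error('unknown category: ' + category);
  }
}
```

A future publishing app that connects to both accounts could use a table like this to decide what to push to the public server, instead of us deciding by hand each time.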

FreedomBox and the remoteStorage-stick

The topic of having a data server in your home brought up the work Markus and I did on combining the FreedomBox with remoteStorage. Our idea was to split the FreedomBox into the actual plug server and a USB drive that you stick into it, for the data storage. The two reasons for splitting the device this way are that it makes it clear to the user where their data is and how they can copy it and back it up, and that it makes tech support easier, since the device contains no valuable data, and can thus easily be reset to factory settings.

To allow this FreedomBox to also serve a public website, we would sell a package containing:

- the plug server

- the 'remoteStorage-stick'

- a Pagekite account to tunnel through

- a TLS certificate for end-to-end encryption

- tokens for flattering unhosted web apps in the 5apps store

There is still a long way to go to make this a sellable product, but it doesn't hurt to start experimenting with its architecture ourselves first, which is why I bought a USB drive, formatted it as ext4 with encryption, and mounted it as my 'remoteStorage-stick'. It's one of those tiny ones that have the hardware built into the connector and don't stick out, so I leave it plugged in to my laptop by default.

Putting your data into your remoteStorage server

By way of FreedomBox prototype, I installed ownCloud on my localhost and pointed a 'freedombox' domain to 127.0.0.1 in my /etc/hosts.

To make your data available through a remoteStorage API, install one of the remoteStorage-compatible personal data servers listed under 'host your own storage' on remotestorage.io/get/. I went for the ownCloud one here, because (like the php-remoteStorage one) it maps data directly onto the filesystem (it uses extended attributes to store the Content-Types). This means you can just import data onto your remoteStorage account by copying it onto your remoteStorage-stick. Do make sure your remoteStorage-stick is formatted with a filesystem that supports extended file attributes.

Nowadays you would probably go for reStore instead, because it's easier to get working. If you just want to get started quickly with remoteStorage, try the starter-kit.

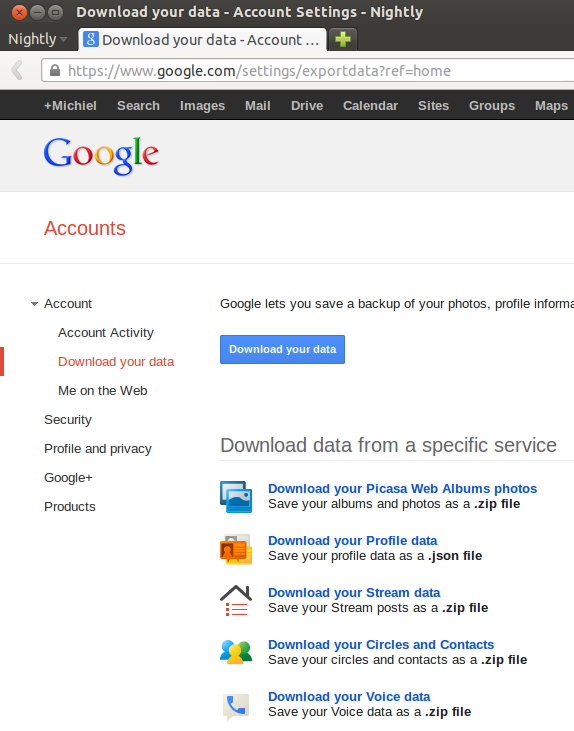

Export your data from Google, Facebook and Twitter

No Cookie Crew - Warning #5: You will have to log in to these services now, because apart from Twitter, they do not offer this same functionality through their APIs.

This part is quite fun: Data Liberation! :) It is actually quite easy to download all your data from Google. This is what it looks like:

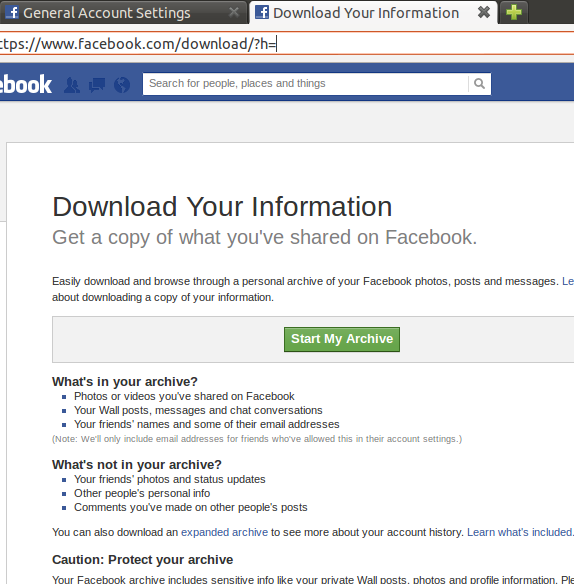

Facebook offers a similar service, even though the contacts export is quite rudimentary, but you can export at least your photos:

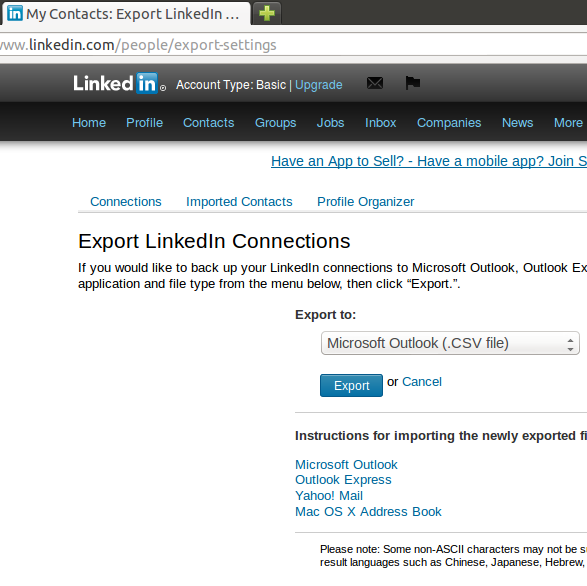

And this is what the LinkedIn one looks like:

For Twitter, tweet data is mostly transient, so there is probably not much point in exporting that. Instead, you could start saving a copy of everything you tweet through sockethub onto your remoteStorage from now on. But to export your contacts, if there are not too many, you can simply scrape https://twitter.com/following and https://twitter.com/followers by opening the web console (Ctrl-Shift-K in Firefox), and pasting:

    var screenNames = [],
        accounts = document.getElementsByClassName('account');
    for (var i = 0; i < accounts.length; i++) {
      screenNames.push(accounts[i].getAttribute('data-screen-name'));
    }
    alert(screenNames);

Do make sure you scroll down first to get all the accounts in view. You could also go through the API of course (see episode 5), and nowadays Twitter also lets you download a zip file from the account settings.

Converting your data to web-ready format

Although you probably want to keep the originals as well, it makes sense to convert all your photos to a 50 KB "web size". If you followed episode 3, then you have an Indie Web server with end-to-end encryption (TLS), so you can safely share photos with your friends by uploading them to unguessable URLs there. It makes sense to throttle 404s when you do this, although even if you don't, as long as the URLs are long enough, it is pretty unlikely that anybody would successfully guess them within the lifetime of our planet.
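To get a feel for the numbers, here is a small sketch for Node.js that generates such a URL and estimates how long guessing would take. The example domain, the file name, the 128 bits of randomness and the rate of one million guesses per second are all assumptions picked for illustration.

```javascript
const crypto = require('crypto');   // Node.js built-in module

// Random path segment with bytes * 8 bits of entropy, hex-encoded.
function unguessablePath(bytes) {
  return crypto.randomBytes(bytes).toString('hex');
}

// Expected number of years needed to hit one specific URL by guessing;
// on average, half of the possible values have to be tried.
function yearsToGuess(bits, guessesPerSecond) {
  const secondsPerYear = 365 * 24 * 3600;
  return Math.pow(2, bits - 1) / guessesPerSecond / secondsPerYear;
}

const url = 'https://example.com/photos/' + unguessablePath(16) + '/beach.jpg';
const years = yearsToGuess(128, 1e6);
```

With these assumptions, `years` comes out on the order of 10^24, which is many orders of magnitude more than the few billion years our planet has existed. Throttling 404s only lowers the guessing rate further.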

In order to be able to play music with your browser, you need to convert your music and sound files to a format that your browser of choice supports, for instance ogg. On unix you can use the avconv tool for this (from Libav, a fork of ffmpeg):

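    # quote "$i" so file names with spaces survive; create target folders first
    for i in */*/* ; do
      mkdir -p ~/allmydata/mediaCache/"$(dirname "$i")"
      avconv -i "$i" -acodec libvorbis -aq 60 \
        ~/allmydata/mediaCache/"$i".ogg
    done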

To convert your videos to ogg, you could try something like:

    for i in *.AVI ; do
      avconv -i "$i" -f ogg \
        ~/allmydata/souvenirs/"$i".ogg
    done